Ingesting data from a Kafka topic

The 23.11 release of Data Bridge introduced the ability to ingest updates from a Kafka topic. This major capability update greatly simplifies integrating CRM with an Apache Kafka-based data hub, consuming data updates, and deserializing Apache Avro messages into JSON.

The ability to ingest data from a Kafka topic can be enabled or disabled during deployment by setting the isDataIngestionActive property in the environment file. To allow Data Bridge to register a Kafka consumer and process the messages it receives, set the value to true.

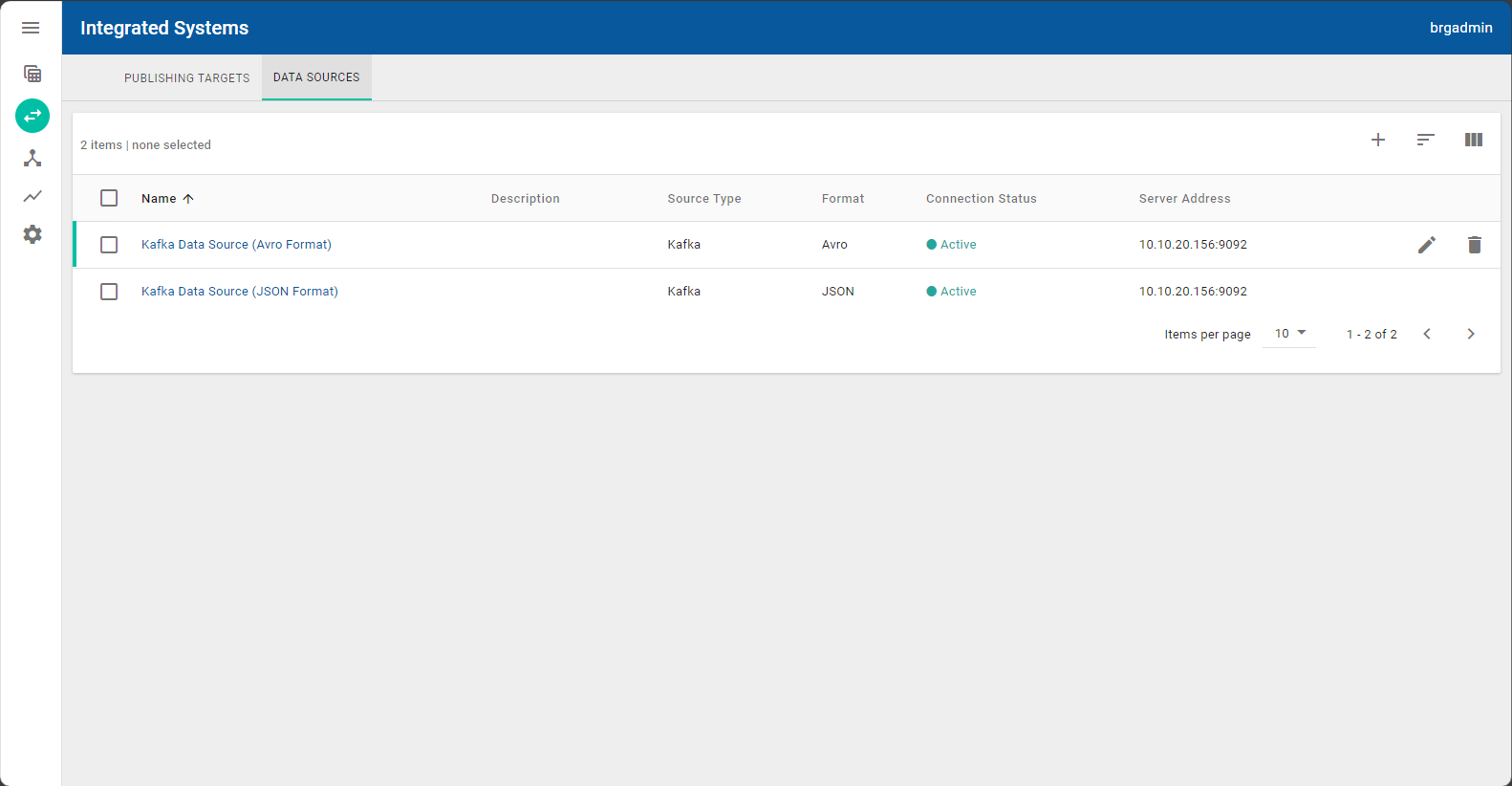

Information about the Kafka topics is managed using the Data Sources tab on the Integrated Systems workspace.

To subscribe to event streaming updates from a Kafka topic, navigate to the Data Sources tab and click the Add Source button at the top of the data table. Complete the Create Data Source dialog that appears to identify the Kafka topic.

To view or modify any additional details about the source, click the Edit button and review or update the information in the Edit Data Source dialog.

You can specify either JSON or Apache Avro as the message format for the data source. Apache Avro messages are deserialized into JSON.

To delete a data source, click the Delete button.

After creating the data source, you can publish the data updates to NexJ CRM or to a third-party HTTP API. The publishing targets are defined on the Publishing Targets tab on the Integrated Systems workspace.
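Data Bridge performs the publishing itself once a target is defined. As a rough illustration of what forwarding an ingested JSON update to an HTTP API amounts to, the sketch below builds a JSON POST request using only the Python standard library. The endpoint URL, the payload fields, and the function name are hypothetical, and the actual request shape that Data Bridge sends to a target may differ.

```python
import json
import urllib.request


def build_publish_request(target_url, update):
    """Build (but do not send) a JSON POST request for one ingested update."""
    body = json.dumps(update).encode("utf-8")
    return urllib.request.Request(
        target_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint and payload, for illustration only.
request = build_publish_request(
    "https://example.com/api/contacts",
    {"id": 42, "name": "Alice"},
)
# Passing the request to urllib.request.urlopen() would send it;
# that call is left out so the sketch has no side effects.
```

Keeping request construction separate from sending makes it possible to inspect or log the outgoing payload before it reaches the target.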

A Data Bridge administrator can build data ingestion configurations at run time using the Generic Views tab on the View Explorer workspace.